WebKitGTK+ hackfest \o/

By kov

It’s been a couple days since I returned from this year’s WebKitGTK+ hackfest in A Coruña, Spain. The weather was very nice, not too cold and not too rainy, we had great food, great drinks and I got to meet new people, and hang out with old friends, which is always great!

I think this was a very productive hackfest, and as usual a very well organized one! Thanks to the GNOME Foundation for the travel sponsorship, to our friends at Igalia for doing an awesome job at making it happen, and to Collabora for sponsoring it and granting me the time to go there! We got a lot done, and although, as usual, our goals list had many items not crossed, we did cross a few very important ones. I took part in discussions about the new WebKit2 APIs, got to know the new design for GNOME’s Web application, which looks great, discussed about Accelerated Compositing along with Joone, Alex, Nayan and Martin Robinson, hacked libsoup a bit to port the multipart/x-mixed-replace patch I wrote to the awesome gio-based infrastructure Dan Winship is building, and some random misc.

The biggest chunk of time, though, ended up being devoted to a very uninteresting (to outsiders, at least), but very important task: making it possible to more easily reproduce our test results. TL;DR? We made our bots’ and development builds use jhbuild to automatically install dependencies; if you’re using tarballs, don’t worry, your usual autogen/configure/make/make install have not been touched. Now to the more verbose version!

The need

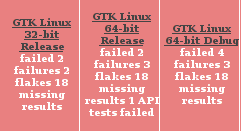

For a couple years now we have supported an increasingly complex and very demanding automated testing infrastructure. We have three buildbot slaves, one provided by Collabora (which I maintain), and two provided by Igalia (maintained by their WebKitGTK+ folks). Those bots build as many check ins as possible with 3 different configurations: 32 bits release, 64 bits release, and 64 bits debug.

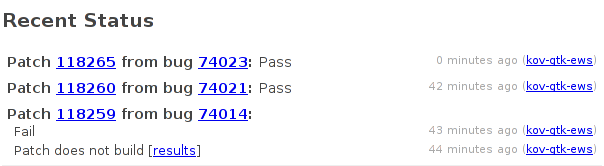

In addition to those, we have another bot called the EWS, or Early Warning System. There are two of those at this moment: one VM provided by Collabora and my desktop, provided by myself. These bots build every patch uploaded to the bugzilla, and report build failures or passes (you can see the green bubbles). They are very important to our development process because if the patch causes a build failure for our port people can often know that before landing, and try fixes by uploading them to bugzilla instead of doing additional commits. And people are usually very receptive to waiting for EWS output and acting on it, except when they take way too long. You can have an idea of what the life of an EWS bot looks like by looking at the recent status for the WebKitGTK+ bots.

Maintaining all of those bots is at times a rather daunting task. The tests require a very specific set of packages, fonts, themes and icons to always report the same size for objects in a render. Upgrades, for instance, had to be synchronized, and usually involve generating new baselines for a large number of tests. You can see in these instructions, for instance, how strict the environment requirements are – yes, we need specific versions of fonts, because they often cause layouts to change in size! At one point we had tests fail after a compiler upgrade, which made rounding act a bit different!

So stability was a very important aspect of maintaining these bots. All of them have the same version of Debian, and most of the packages are pinned to the same version. On the other hand, and in direct contradition to the stability requirement, we often require bleeding edge versions of some libraries we rely on, such as libsoup. Since we started pushing WebKitGTK+ to be libsoup-only, its own progress has been pretty much driven by WebKitGTK+’s requirements, and Dan Winship has made it possible to make our soup backend much, much simpler and way more featureful. That meant, though, requiring very recent versions of soup.

To top it off, for anyone not running Debian testing and tracking the exact same versions of packages as the bots it was virtually impossible to get the tests to pass, which made it very difficult for even ourselves to make sure all patches were still passing before committing something. Wow, what a mess.

The explosion^Wsolution

So a few weeks back Martin Robinson came up with a proposed solution, which, as he says, is the “nuclear bomb” solution. We would have a jhbuild environment which would build and install all of the dependencies necessary for reproducing the test expectations the bots have. So over the first three days of the hackfest Martin and myself hacked away in building scripts, buildmaster integration, a jhbuild configuration, a jhbuild modules file, setting up tarballs, and wiring it all in a way that makes it convenient for the contributors to get along with. You’ll notice that our buildslaves now have a step just before compiling called “updated gtk dependencies” (gtk is the name we use for our port in the context of WebKit), which runs jhbuild to install any new dependencies or version bumps we added. You can also see that those instructions I mentioned above became a tad simpler.

It took us way more time than we thought for the dust to settle, but it eventually began to. The great thing of doing it during the hackfest was that we could find and fix issues with weird configurations on the spot! Oh, you build with AR_FLAGS=cruT and something doesn’t like it? OK, we fix it so that the jhbuild modules are not affected by that variable. Oh, turns out we missed a dependency, no problem, we add it to the modules file or install them on the bots, and then document the dependency. I set up a very clean chroot which we could use for trying out changes so as to not disrupt the tree too much for the other hackfest participants, and I think overall we did good.

The aftermath

By the time we were done our colleagues who ran other distributions such as Fedora were already being able to get a substantial improvements to the number of tests passing, and so did we! Also, the ability to seamlessly upgrade all the bots with a simple commit made it possible for us to very easily land a change that required a very recent (as in unreleased) version of soup which made our networking backend way simpler. All that red looks great, doesn’t it? And we aren’t done yet, we’ll certainly be making more tweaks to this infrastructure to make it more transparent and more helpful to the users (contributors and other people interested in running the tests).

If you’ve been hit by the instability we caused, sorry about that, poke mrobinson or myself in the #webkitgtk+ IRC channel on FreeNode, and we’ll help you out or fix any issues. If you haven’t, we hope you enjoy all the goodness that a reproducible testing suite has to offer! That’s it for now, folks, I’ll have more to report on follow-up work started at the hackfest soon enough, hopefully =).